Using Cache Effectively in Episerver

In web applications, including Episerver applications, using cache properly is crucial to the performance of your site. As a developer, you have a number of different types of cache available to you. Each has a different purpose, lifecycle and storage location, so it's worth knowing how and when to use each one.

What can be cached in Episerver?

In short, anything can be cached. Cache storage, although fast, is usually limited by cost or by the space available, so it pays to be selective about what goes into it.

Commonly cached data:

- Data that is fetched often from a slower data source

- The result of calculations or other operations on a set of data

- Static files

- Markup and responses sent to the end user

Cache is best used for data that would take a significant amount of time or resources for the application to generate when needed, and that does not change very often.

Where can it be cached?

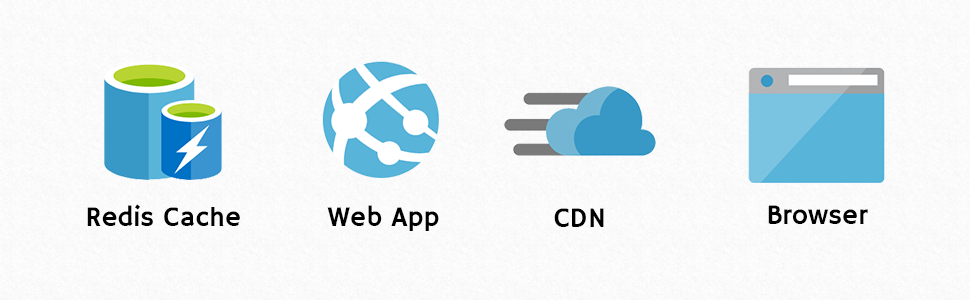

There's a possibility of caching data at nearly every piece of infrastructure in an environment. Let's take a look at a typical .NET application hosted in Azure:

Although the diagram above is specific to Azure and includes Redis for Azure, there are many other ways to achieve the same thing. Data can be stored at any of these places in the environment, including:

- In a persistent cache layer (shown as Redis for Azure)

- Within the web application itself (shown as Azure Web App)

- On the Content Delivery Network (shown as Azure CDN)

- In the website visitor's browser

What Are the Types of Caching in Episerver?

Let's look at some common types of cache (this definitely isn't a full list):

- Output Caching / Request Caching

- File Caching

- Object Caching

Output Cache / Request Cache

An output cache stores the entire result of a request. For a web page, that is the complete HTML sent to the browser, so when the same page is requested again the server can respond extremely quickly without rendering it a second time.
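To make the idea concrete, here is a minimal, framework-agnostic sketch of an output cache in C#. OutputCacheSketch and its render delegate are hypothetical names used only for illustration; a real Episerver site would rely on the framework's own output caching rather than a hand-rolled class like this. The whole rendered HTML is stored per request path and reused until it expires.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical, minimal output cache: stores the complete rendered response per request path.
public sealed class OutputCacheSketch
{
    private readonly ConcurrentDictionary<string, (string Html, DateTimeOffset Expires)> _entries = new();
    private readonly TimeSpan _duration;
    private readonly Func<DateTimeOffset> _clock; // injectable so expiry can be tested

    public OutputCacheSketch(TimeSpan duration, Func<DateTimeOffset>? clock = null)
    {
        _duration = duration;
        _clock = clock ?? (() => DateTimeOffset.UtcNow);
    }

    // Returns the cached HTML for a path, or renders it and caches the result.
    public string GetResponse(string path, Func<string, string> render)
    {
        DateTimeOffset now = _clock();
        if (_entries.TryGetValue(path, out var entry) && entry.Expires > now)
            return entry.Html;                 // hit: no rendering work at all

        string html = render(path);            // miss or expired: do the expensive work
        _entries[path] = (html, now + _duration);
        return html;
    }
}
```

Because the cache key here is only the path, every visitor gets the same markup until the entry expires. That is the personalization trade-off listed below: varying the key by user or segment would restore personalization, at the cost of a lower hit rate.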

Pros

- Extremely fast

- Saves processing time

Cons

- Needs careful configuration (what to cache and for how long) to avoid serving stale pages

- Prevents real-time personalization, because every visitor receives the same cached markup unless the personalized parts are loaded with separate requests afterwards

File Caching In Episerver

Some files served by the application rarely change. For these files, you can set HTTP response headers (such as Cache-Control) that tell every downstream cache, including CDNs and browsers, whether it may store the file and, if so, for how long before fetching it from the server again.
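As an illustration, the sketch below chooses a Cache-Control header value for a static file using CacheControlHeaderValue from System.Net.Http.Headers. The policy itself (one year for stylesheets, scripts, images and fonts; ten minutes for everything else) and the StaticFileCachePolicy name are hypothetical examples, not Episerver defaults.

```csharp
using System;
using System.IO;
using System.Net.Http.Headers;

public static class StaticFileCachePolicy
{
    // Hypothetical list of file types that rarely change between deployments.
    private static readonly string[] LongLivedExtensions =
        { ".css", ".js", ".png", ".jpg", ".svg", ".woff2" };

    // Builds the Cache-Control value to send with a static file response.
    public static CacheControlHeaderValue CacheHeaderFor(string path)
    {
        string extension = Path.GetExtension(path).ToLowerInvariant();
        bool longLived = Array.IndexOf(LongLivedExtensions, extension) >= 0;

        return new CacheControlHeaderValue
        {
            Public = true, // browsers and shared caches such as a CDN may store the file
            MaxAge = longLived ? TimeSpan.FromDays(365) : TimeSpan.FromMinutes(10)
        };
    }
}
```

In an ASP.NET application the resulting value would be written to the response's Cache-Control header. A lifetime as long as a year is only safe if the file's URL changes whenever its content does; otherwise visitors may keep an old copy for that long.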

Pros

- Can provide near-zero latency when loading static files

- Prevents repeated requests for static files to the server

- Fewer requests to the server means fewer servers are needed to handle the same amount of web traffic

Cons

- Can lead to inconsistent end-user experiences by using old copies of files/data

- Cached copies do not live on the server, so they cannot be managed, purged or refreshed until they expire (or are cleared intentionally)
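One common way to live with cached copies you cannot purge is to change a file's URL whenever its content changes, often called cache busting or fingerprinting. The sketch below assumes a hypothetical AssetUrl.Fingerprint helper that appends a short SHA-256 hash of the file's contents as a "v" query parameter; the parameter name and the 8-character hash length are arbitrary choices.

```csharp
using System;
using System.Security.Cryptography;

public static class AssetUrl
{
    // Hypothetical helper: appends a short hash of the file's content as a "v" query parameter,
    // so a changed file gets a new URL and downstream caches treat it as a new resource.
    public static string Fingerprint(string url, byte[] fileContents)
    {
        byte[] hash;
        using (SHA256 sha256 = SHA256.Create())
        {
            hash = sha256.ComputeHash(fileContents);
        }

        // The first 4 bytes (8 hex characters) are usually enough to tell versions apart.
        string version = BitConverter.ToString(hash, 0, 4).Replace("-", "").ToLowerInvariant();
        string separator = url.IndexOf('?') >= 0 ? "&" : "?";
        return url + separator + "v=" + version;
    }
}
```

Pages that reference the fingerprinted URL pick up the new file as soon as its content changes, while unchanged files keep the same URL and stay cached downstream.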

Object Caching In Episerver

Last (of the ones I'll cover) but definitely not least is object caching. An object cache can store any type of object, which makes it the most versatile option. Object caches can be implemented in any number of ways, but the two most common are:

- Memory Cache

- Persistent Cache

Each has its own pros and cons, and they can be used in combination with each other. So how are they different?

Memory Cache

Memory cache is the most common layer of cache. Exactly as it sounds, it uses the memory your application is already running in to store any data you wish. There are a number of ways to access memory cache; the most straightforward are IMemoryCache (in the Microsoft.Extensions.Caching.Memory namespace) and the MemoryCache class in System.Runtime.Caching.
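A minimal sketch of the cache-aside pattern with IMemoryCache, assuming the Microsoft.Extensions.Caching.Memory package is referenced. ProductNameService and its loadFromDatabase delegate are hypothetical stand-ins for whatever slow lookup you want to cache, and the ten-minute expiration is an arbitrary example value.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical service that caches the result of a slow lookup in process memory.
public sealed class ProductNameService
{
    private readonly IMemoryCache _cache;
    private readonly Func<int, string> _loadFromDatabase; // stand-in for a slow data source

    public ProductNameService(IMemoryCache cache, Func<int, string> loadFromDatabase)
    {
        _cache = cache;
        _loadFromDatabase = loadFromDatabase;
    }

    private static string CacheKey(int productId) => "product-name:" + productId;

    public string GetName(int productId)
    {
        // GetOrCreate runs the factory only when the key is missing or has expired.
        return _cache.GetOrCreate(CacheKey(productId), entry =>
        {
            entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(10);
            return _loadFromDatabase(productId);
        });
    }

    // Remove the entry when the underlying data changes so the next read reloads it.
    public void Invalidate(int productId) => _cache.Remove(CacheKey(productId));
}
```

GetOrCreate calls the factory only on a miss, but it is not a lock: two requests that miss at the same moment can both run it. Keep that in mind if the factory is expensive.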

Pros

- Very fast

- Extremely flexible

Cons

- Tied to your application lifecycle: if the application restarts, the memory cache is cleared and has to be rebuilt. If the site relies heavily on cache, it can be slow after startup until the cache is built again.

- App pool specific: if you host multiple instances of your site to scale (for example on Episerver DXC or with autoscale in Azure), each instance's memory cache is independent of the others

Persistent Cache

Persistent cache addresses memory cache's two main drawbacks: it survives application restarts, and it is shared by all instances instead of existing separately in each one. Persistent caches are usually still backed by memory in some way. For example, Redis is "an in-memory data structure store, used as a database, cache and message broker" and "has built-in replication, transactions and different levels of on-disk persistence". In effect, it combines memory and disk storage to provide a persistent, shared, fast cache.
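Memory cache and persistent cache are often layered: each instance checks its own memory first and falls back to the shared cache before doing the slow work. The sketch below shows only that lookup order. ISharedCache, InMemorySharedCache and TwoTierCache are hypothetical names, and InMemorySharedCache is just a dictionary standing in for a real Redis client so the example runs without a server.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical abstraction over a shared, out-of-process cache such as Redis.
public interface ISharedCache
{
    bool TryGet(string key, out string value);
    void Set(string key, string value);
}

// Stand-in for a real Redis client so this sketch runs without a server.
public sealed class InMemorySharedCache : ISharedCache
{
    private readonly ConcurrentDictionary<string, string> _store = new();
    public bool TryGet(string key, out string value) => _store.TryGetValue(key, out value);
    public void Set(string key, string value) => _store[key] = value;
}

// One instance's view: a private memory tier in front of the shared tier.
public sealed class TwoTierCache
{
    private readonly ConcurrentDictionary<string, string> _local = new(); // this instance only
    private readonly ISharedCache _shared;                              // shared by all instances

    public TwoTierCache(ISharedCache shared) => _shared = shared;

    public string GetOrAdd(string key, Func<string> factory)
    {
        // 1. Fastest: this instance's own memory.
        if (_local.TryGetValue(key, out string cached)) return cached;

        // 2. Shared cache: may have been filled by any instance, and outlives this one.
        if (!_shared.TryGet(key, out string value))
        {
            // 3. Slow source: reached only on a miss in both tiers.
            value = factory();
            _shared.Set(key, value);
        }

        _local[key] = value;
        return value;
    }
}
```

The sketch leaves out expiry and invalidation of the local tier; a real implementation would give local entries a short lifetime or remove them when the shared value changes, otherwise instances can drift apart again.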

Memory Cache and Episerver

Memory cache is the most common type of cache available to an Episerver application. If you are on Azure or DXC, you may be scaling out to more instances to support the load, depending on how much traffic your website receives. Each of these instances is its own virtual environment, and therefore each one has its own memory cache. A common issue in a scaled environment like this is that one instance removes an item from its memory cache while the item still exists in the other instances' caches. When caches get out of sync, two visitors hitting two different instances of your site can be shown different data, and debugging this can be a painful experience.
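The out-of-sync problem can be reproduced in miniature. The sketch below models each web instance as an object with its own private dictionary, a simplification of a real per-process memory cache; WebInstance is a hypothetical name.

```csharp
using System.Collections.Generic;

// Hypothetical model of one scaled-out web instance and its private memory cache.
public sealed class WebInstance
{
    private readonly Dictionary<string, string> _memoryCache = new();

    public void Insert(string key, string value) => _memoryCache[key] = value;

    // Only affects this instance; nothing tells the other instances.
    public void Remove(string key) => _memoryCache.Remove(key);

    public string? Get(string key) => _memoryCache.TryGetValue(key, out var value) ? value : null;
}
```

If both instances have cached a price and the request that changes it lands on instance A only, A removes its entry while visitors routed to instance B keep seeing the old price until B's entry expires or the application restarts.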

The best solution for keeping instances consistent is a shared persistent cache like Redis. On Episerver DXC-Standard, Redis Cache is not included, but I believe it is available as an add-on. Without Redis or another persistent cache offering, you need to take measures to keep your instances' caches consistent with each other. Episerver provides an interface called ISynchronizedObjectInstanceCache for this. As the name suggests, it attempts to keep the object caches of all running instances synchronized.

It works by notifying the other running instances of cache removal events, so that they remove the same entries from their own caches and, hopefully, don't keep serving invalid data. Let's look at the code quickly; it's short:

Code from the ISynchronizedObjectInstanceCache implementation

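```csharp
public void Insert(string key, object value, CacheEvictionPolicy evictionPolicy)
{
    // Inserts go to this instance's local cache only; other instances are not told.
    this._localCache.Insert(key, value, evictionPolicy);
}

public object Get(string key)
{
    // Reads are always served from the local cache.
    return this._localCache.Get(key);
}

public void Remove(string key)
{
    // Removals are applied locally and then propagated to the other running instances.
    this.RemoveLocal(key);
    this.RemoveRemote(key);
}
```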

From the code, we can see that ISynchronizedObjectInstanceCache synchronizes removals but not insertions. It helps keep every instance's cache valid, but not identical. Because values are only inserted locally, each app instance has to build its own cache, and has to do so again every time the application starts up or recycles.
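To see what removal-only synchronization means in practice, here is a simplified model of the behavior described above. It is not Episerver's implementation: SyncedInstance and CacheBus are hypothetical, and CacheBus delivers removal notifications synchronously within one process, whereas a real deployment sends them between servers.

```csharp
using System.Collections.Generic;

// Hypothetical in-process stand-in for the notification channel between instances.
public sealed class CacheBus
{
    private readonly List<SyncedInstance> _instances = new();

    public void Join(SyncedInstance instance) => _instances.Add(instance);

    // Tell every other instance to drop the key from its local cache.
    public void BroadcastRemove(string key, SyncedInstance sender)
    {
        foreach (var instance in _instances)
            if (!ReferenceEquals(instance, sender)) instance.RemoveLocal(key);
    }
}

public sealed class SyncedInstance
{
    private readonly Dictionary<string, object> _localCache = new();
    private readonly CacheBus _bus;

    public SyncedInstance(CacheBus bus)
    {
        _bus = bus;
        bus.Join(this);
    }

    // Like the excerpt: inserts and reads touch only the local cache.
    public void Insert(string key, object value) => _localCache[key] = value;
    public object? Get(string key) => _localCache.TryGetValue(key, out var value) ? value : null;

    // Like the excerpt: remove locally, then notify the other instances.
    public void Remove(string key)
    {
        RemoveLocal(key);
        _bus.BroadcastRemove(key, this);
    }

    public void RemoveLocal(string key) => _localCache.Remove(key);
}
```

A removal on one instance clears the entry everywhere, but an insert on one instance is invisible to the others, so each instance still starts cold and builds its own copy.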

Wrapping Up

I highly recommend leveraging cache wherever you can. If you are using Episerver DXC (or running any other .NET web application in a scaled environment), look into cache synchronization options to keep your cache valid on all instances. If you're able to use one, a persistent caching option like Redis could benefit you greatly, and I strongly recommend considering it if you use cache heavily and your application performs poorly while its cache is still being built.

Please leave a comment below if you found any of this helpful or spotted a mistake; I'd greatly appreciate it!